Perl 6 RSS Feeds

Steve Mynott (Freenode: stmuk) steve.mynott (at)gmail.com / 2016-08-31T08:21:19

Yours truly reporting en route from the YAPC::Europe 2016 in Cluj-Napoca to the Alpine Perl Workshop in Innsbruck. should be appearing shortly.

Audrey Tang, the original creator of Pugs (an earlier implementation of Perl 6 using Haskell), has been appointed as a minister without portfolio in the Taiwanese government. I think it is safe to say that the entire Perl community wishes her all the best in this new endeavour! Each time one does a spectest, remember that the Official Perl 6 Test Suite was started as part of the Pugs project!

Bag/Mix and eqv has become a lot faster.

.grep or .first on a list of Pairs with a Regex now works.

RAKUDO_EXCEPTIONS_HANDLER environment variable can now be set to JSON to have errors appear as a JSON stream on STDERR.

Hash.classify now handles Junctions correctly.

next and return are no longer allowed at the top level of the REPL.

IO::Handle.comb/.split no longer work on handles that were opened in :bin mode (at least until we’ve figured out sustainable semantics of this functionality).

IO::Handle.slurp-rest now supports a :close flag that will close the handle if so requested.

nginx using sparrowdo and Sparrowdo::Nginx Perl6 module by Alexey Melezhik.

nginx from the source using Sparrowdo tool by Alexey Melezhik (with Reddit comments).

Quite a nice crop again this week:

It has been a busy week for yours truly with all the travelling and conference attending and socializing and sleep depriving. I hope I didn’t miss too many important things. If you think I did, let me know and we’ll let the world know the next week. Until then!

Most of my recent Perl 6 development time has been spent hunting down and fixing various concurrency bugs. We’ve got some nice language features in this area, and they’ve been pretty well received so far. However, compared with many areas of Perl 6, they have been implemented relatively recently. Therefore, they have had less time to mature – which, of course, means more bugs and other rough edges.

Concurrency bugs tend to be rather tedious to hunt down. This is in no small part because reproducing them reliably can be challenging. Even once a fairly reliable reproduction is available, working out what’s going on can be tricky. Like all debugging, being methodical and patient is the key. I tend to keep notes of things I’ve tried and observed, and output produced by instrumenting programs with logging (fancy words for “adding prints and exception throws when sanity checks fail”). I will use interactive debuggers when needed, but even then the data from them tends to end up in my editor on any extended bug hunt. Debugging is in some ways the experimental science of programming. Even with a good approach, being sufficiently patient – or perhaps just downright stubborn – matters plenty too.

In the next 2-3 posts here, I’ll discuss a few of the bugs I recently hunted down. It will not be anything close to an exhaustive list of them, which would surely be as boring for you to read as it would be for me to write. This is just the “greatest hits”, if you like.

This hunt started out looking in to various hangs that had been reported. Deadlocks are a broad category of bug (there’s another completely unrelated one I’ll cover in this little series, even). However, a number of ones that had shown up looked very similar. All of them showed a number of threads trying to enter GC, stuck in the consensus loop (which, yes, really is just a loop that we go around asking, “did every thread agree we’re going to do GC yet?”)

I’ll admit I went into this one with a bit of a bias. The GC sync-up code in question is a tad clever-looking. That doesn’t mean it’s wrong, but it makes it harder to be comfortable it’s correct. I also worried a bit that the cost of the consensus loop might well be enormous. So I let myself become a bit distracted for some minutes doing a profiling run to find out. I mean, who doesn’t want to be the person who fixed a concurrency bug in the GC and made it faster at the same time?!

I’ve found a lot of useful speedups over the years using callgrind. I’ve sung its praises on this blog at least once before. By counting CPU cycles, it can give very precise and repeatable measurements on things that – measured using execution time – I’d consider within noise. With such accuracy, it must be the only profiler I need in my toolbox, right?

Well, no, of course not. Any time somebody says that X tool is The Best and doesn’t explain the context when it’s The Best, be very suspicious. Running Callgrind on a multi-threaded benchmark gave me all the ammunition I could ever want for replacing the GC sync-up code. It seemed that a whopping 30% of CPU cycles were spent in the GC consensus loop! This was going to be huuuuge…

Or was it? I mean, 30% seems just a little too huge. Profiling is vulnerable to the observer effect: the very act of measuring a program’s performance inevitably changes the program’s performance. And, while on the dubious physics analogies (I shouldn’t; I was a pretty awful physicist once I reached the relativity/QM stuff), there’s a bit of an uncertainty principle thing going on. The most invasive profilers can tell you very precisely where in your program time is spent, but you’re miles off knowing how fast you’re normally going in those parts of the program. They do this by instrumenting the program (like the current Perl 6 on MoarVM profiler does) or running it on a synthetic CPU, as Callgrind does. By contrast, a sampling profiler lets your program run pretty much as normal, and just takes regular samples of the call stack. The data is much less precise about where the program is spending its time, but it is a much better reflection of how fast the program is normally going.

So what would, say, the perf sampling profiler make of the benchmark? Turns out, well less than 1% of time was spent in the GC consensus loop in question. (Interestingly, around 5% was spent in a second GC termination consensus loop, which wasn’t the one under consideration here. That will be worth looking into in the future.) The Visual Studio sampling profiler on Windows – which uses a similar methodology – also gave a similar figure, which was somewhat reassuring also.

Also working against Callgrind is the way the valgrind suite of tools deals with multi-threaded applications – in short by serializing all operations onto a single thread. For a consensus loop, which expects to be dealing with syncing up a number of running threads, this could make a radical difference.

Finally, I decided to check in on what The GC Handbook had to say on the matter. It turns out that it suggests pretty much the kind of consensus loop we already have in MoarVM, albeit rather simpler because it’s juggling a bit less (a simplification I’m all for us having in the future too). So, we’re not doing anything so unusual, and with suitable measurements it’s performing as expected.

So, that was an interesting but eventually fairly pointless detour. What makes this all the more embarrassing, however, is what came next. Running an example I knew to hang under GDB, I waited for it to do so, hit Ctrl + C, and started looking at all of the threads. Here’s a summary of their states:

And yes, for the sake of this being a nice example for the blog I perhaps should not have picked one with 17 threads. Anyway, we’ll cope. First up, the easy to explain stuff. All the threads that are “blocking on concurrent queue read (cond var wait)” are fairly uninteresting. They are Perl 6 thread pool threads waiting for their next task (that is, wanting to pull an item from the scheduler’s queue, and waiting for it to be non-empty).

Thread 01 is the thread that has triggered GC. It is trying to get consensus from other threads to begin GC. A number of other threads have already been interrupted and are also in the consensus loop (those marked “in AO_load_read at MVM_gc_enter_from_interrupt”). This is where I had initially suspected the problem would be. That leaves 4 other threads.

You might wonder how we cope with threads that are simply not in a position to participate in the consensus process, because they’re stuck in OS land, blocked waiting on I/O, a lock, a condition variable, a semaphore, a thread join, and so forth. The answer is that before they hand over control, they mark themselves as blocked. Another thread will steal their work if a GC happens while the thread is blocked. When the thread becomes unblocked, it marks itself as such. However, if a GC is already happening at that point, it’s not safe for the thread to proceed. Thus, it yields until GC is done. This accounts for the 3 threads described as “in mark thread unblocked; yielded”.

Which left one thread, which was trying to acquire a lock in order to peek a queue. The code looked like this:

if (kind != MVM_reg_obj)
    MVM_exception_throw_adhoc(tc, "Can only shift objects from a ConcBlockingQueue");
uv_mutex_lock(&cbq->locks->head_lock);
while (MVM_load(&cbq->elems) == 0) {
    MVMROOT(tc, root, {

Spot anything missing?

Here’s the corrected version of the code:

if (kind != MVM_reg_obj)
    MVM_exception_throw_adhoc(tc, "Can only shift objects from a ConcBlockingQueue");
MVM_gc_mark_thread_blocked(tc);
uv_mutex_lock(&cbq->locks->head_lock);
MVM_gc_mark_thread_unblocked(tc);
while (MVM_load(&cbq->elems) == 0) {
    MVMROOT(tc, root, {

Yup, it was failing to mark itself as blocked while contending for a lock, meaning the GC could not steal its work. So, the GC’s consensus algorithm wasn’t to blame after all. D’oh.

I actually planned to cover a second issue in this post. But, it’s already gone midnight, and perhaps that’s enough fun for one post anyway. :-) Tune in next time, for more GC trouble and another cute deadlock!

After a long trans-European journey, yours truly arrived in Cluj for YAPC::Europe, without a lot of time to spend on the Perl 6 Weekly. So, this time, only a small Perl 6 Weekly. Hope I didn’t miss too much!

Zoffix Znet worked very hard on the Rakudo 2016.08 Compiler Release, but he botched a tag, so now we have a Rakudo 2016.08.1 Compiler Release. I’m pretty sure we will have a new Rakudo Star release based on this Compiler Release pretty soon!

It has been a while since Leon Timmermans worked on the Perl 6 version of TAP, which allows tests (such as running with make spectest) to use Perl 6 itself to run the test-files, rather than Perl 5. For the first time, this actually completed flawlessly for yours truly in the past week:

All tests successful.
Files=1118, Tests=52595, 336 wallclock secs
Result: PASS

Again a step closer to providing the whole tool-chain in Perl 6!

Some of the core developments of the past week that made it into the 2016.08.1 release:

Set/SetHash related coercions between 40x and 300x faster.

PERL6_TEST_DIE_ON_FAIL environment variable that will stop testing after the first failed test.

And the blog posts keep on coming!

Quite a nice selection again!

Time to get some sleep before all the stuff starts happening in Cluj!

Useful, descriptive errors are becoming the industry standard and Perl 6 and Rust languages are leading that effort. The errors must go beyond merely telling you the line number to look at. They should point to a piece of code you wrote. They should make a guess at what you meant. They should be referencing your code, even if they originate in some third party library you’re using.

Check out The Awesome Errors of Perl 6 by Zoffix! (see also the Reddit comments).

:i, especially on large haystacks.

.splice at least 10x as fast. She also added another 29 ranges of unicode digit ranges to magic auto-increment / decrement.

“useless use” warnings.

map logic for blocks that are guaranteed to not return a Slip. For instance ^10000 .map: *.Str and ^10000 .map: -> \x --> Str { x.Str }.

:exists adverb on multi-dimensional subscript literals.

ä with a even when :m (aka ignoremark) was not specified.

--profile feature, which was temporarily broken.

Mostly housekeeping, gfldex++ tells me:

USAGE.

gather and take.

role arguments.

Quite some nice blog posts! And some nice fixes and improvements. Can’t wait to see what we will have next week!

On my quest to help with the completion of the docs for Perl 6 I found the task of finding undocumented methods quite cumbersome. There are currently 241 files that should contain method definitions and that number will likely grow with 6.d.

Luckily I wield the powers of Perl 6, which allow me to parse text swiftly and to introspect the built-in types.

my \methods := gather for types -> ($type-name, $path) {
    take ($type-name, $path, ::($type-name).^methods(:local).grep({
        my $name = .name;
        # Some builtins like NQPRoutine don't support the introspection we need.
        # We solve this the British way, complain and carry on.
        CATCH { default { say "problematic method $name in $type-name" unless $name eq '<anon>'; False } }
        (.package ~~ ::($type-name))
    })».name)
}

We can turn a Str into a list of method objects by calling ::("YourTypeName").^methods(). The :local adverb will filter out all inherited methods but not methods that are provided by roles and mixins. To filter roles out we can check if the .package property of a method object is the same as the type we got the methods from.
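A minimal sketch of the difference the :local adverb makes. The Shape and Circle classes are hypothetical and exist only for this illustration; the lookup by name mirrors the ::($type-name) form used in the script.

```perl6
# Hypothetical types, made up for this illustration.
class Shape { method describe { "a shape" } }
class Circle is Shape {
    has $.radius = 1;
    method area { pi * $!radius ** 2 }
}

# Look the type up by name, as the script does with ::($type-name).
my $type-name = 'Circle';
my @all   = ::($type-name).^methods.map(*.name);          # includes the inherited describe
my @local = ::($type-name).^methods(:local).map(*.name);  # only what Circle itself provides

say "all:   @all[]";
say "local: @local[]";
```

The exact contents of the local list can vary between Rakudo versions (accessors and generated methods may show up), so the sketch only relies on describe being absent from it.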

For some strange reason I started to define lazy lists and didn’t stop ’till the end.

my \ignore = LazyLookup.new(:path($ignore));

That creates a kind of lazy Hash with a type name as a key and a list of strings of method names as values. I can go lazy here because I know the file those strings come from will never contain the same key twice. This works by overloading AT-KEY with a method that will check in a private Hash if a key is present. If not, it will read a file line by line, store any key/value pairs it finds in the private Hash, and stop when it has found the key AT-KEY was asked for. In a bad case it has to read the entire file to the end. If we check only one type name (the script can and should be used to do that) we can get lucky and it won’t pass the middle of the file.
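The real LazyLookup class is not shown here, so the following is only a sketch of the idea under stated assumptions: the class name LazyLookupSketch, the lines-read helper and the "Type: method method" line format are all made up, and an in-memory list of lines stands in for the file.

```perl6
# Hypothetical sketch; not the LazyLookup class from the script.
class LazyLookupSketch {
    has @.lines;        # stands in for the lines of the ignore file
    has %!seen;         # private cache of everything read so far
    has $!pos = 0;      # index of the next unread line

    # Called for subscripts such as $lookup<Int> or $lookup{$type-name}.
    method AT-KEY($key) {
        # Read on only until the requested key has shown up (or the input ends).
        until %!seen.EXISTS-KEY($key) or $!pos >= @!lines.elems {
            my ($name, $methods) = @!lines[$!pos++].split(':', 2);
            %!seen{$name.trim} = $methods.words.List;
        }
        %!seen{$key}
    }

    # How many lines had to be read so far.
    method lines-read { $!pos }
}

my $lookup = LazyLookupSketch.new(lines => (
    'Any: gist perl',
    'Int: chr',
    'Str: chomp chop',
));

say $lookup<Int>;        # only the first two lines are read for this
say $lookup.lines-read;
```

Asking for a key near the top of the input leaves the rest unread, which is the lucky case described above; asking for a missing key reads everything.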

my \type-pod-files := $source-path.ends-with('.pod6')

That lazy list produces IO::Path objects for every file under a directory that ends in ‘.pod6’ and descends into sub-directories in a recursive fashion. Or it contains no lazy list at all but a single IO::Path to the one file the script was asked to check. Perl 6 will turn a single value into a list with that value if ever possible to make it easy to use listy functions without hassle.

my \types := gather for type-pod-files».IO {

This lazy list is generated by turning IO::Path into a string and yanking the type name out of it. Type names can be fairly long in Perl 6, e.g. X::Method::Private::Permission.

my \methods := gather for types -> ($type-name, $path) {

Here we gather a list of lists of method names found via introspection.

my \matched-methods := gather for methods -> ($type-name, $path, @expected-methods) {

This list is the business end of the script. It uses Set operators to get the method names that are in the list created by introspection (methods that are in the type object) but neither in the pod6-file nor in the ignore-methods file. If the ignore-methods file is complete and correct, that will be a list of methods that we forgot to write documentation for.
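The body of that block is not shown, so here is a small sketch of the set arithmetic it describes. The three lists are made-up sample data, and the names other than @expected-methods and $missing-methods are assumptions.

```perl6
# Made-up sample data standing in for the three sources of method names.
my @expected-methods   = <abs ceiling floor round sign>;  # found via introspection
my @documented-methods = <abs floor round>;               # found in the pod6 file
my @ignored-methods    = <sign>;                          # listed in the ignore file

# (-) is the set difference operator; it coerces the lists to Sets.
my Set $missing-methods = @expected-methods (-) @documented-methods (-) @ignored-methods;

put $missing-methods;
```

Because the result is a Set, duplicates and ordering in the input lists do not matter.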

for matched-methods -> ($type-name, $path, Set $missing-methods) {
    put "Type: {$type-name}, File: ⟨{$path}⟩";
    put $missing-methods;
    put "";
};

And then at the end we have a friendly for loop that takes those missing methods and puts them on the screen.

Looking at my work I realised that the whole script wouldn’t do squat all without that for loop. Well, it would allocate some RAM and set up a handful of objects, just to tell the GC to gobble them all up. Also there is a nice regularity with those lazy lists. They take the content of the previous list and use destructuring to stick some values into input variables that we can nicely name. Then they declare and fill a few output variables, again with proper speaking names, and return a list of those. Ready to be destructured in the next lazy list. I can use the same output names as input names in the following list, which makes for good copypasta.

While testing the script and fixing a few bugs I found that any mistake I made that triggers on any pod6-file terminated the program faster than I could start it. I got the error message I needed to fix the problem as early as possible. The same is true for bugs that trigger only on some files. Walking a directory tree is not impressively fast yet, as Rakudo creates a lot of objects based on c-strings that drop out of libc, just to turn them right back into c-strings when you ask for the content of another directory or open a file. No big deal for 241 files, really, but I did have the pleasure to $work with an archive of 2.5 million files before. I couldn’t possibly drink all that coffee.

I like to ride the bicycle and as such could be dead tomorrow. I better keep programming in a lazy fashion so I get done as much as humanly possible.

EDIT: The docs were wrong about how gather/take behaves at the time of the writing of this blog post. They are now corrected, which should lead to less confusion.

Zoffix answered a question about Perl 5’s <> operator (with a few steps in-between as it happens on IRC) with a one liner.

slurp.words.Bag.sort(-*.value).fmt("%10s => %3d\n").say;

While looking at how this actually works I stepped on a few idioms and a hole. But first let’s look at how it works.

The sub slurp will read the whole “file” from STDIN and return a Str. The method Str::words will split the string into a list by some unicode-meaning of word. Coercing the list into a Bag creates a counting Hash and is a shortcut for the following expression.

my %h; %h{$_}++ for <peter paul marry>; dd %h

# OUTPUT«Hash %h = {:marry(1), :paul(1), :peter(1)}»

Calling .sort(-*.value) on an Associative will sort by values descending and return an ordered list of Pairs. List::fmt will call Pair::fmt, which formats with the .key as its 2nd and the .value as its 3rd parameter. The formatted entries are joined with a space and say outputs the result to STDOUT. The last step is a bit wrong because there will be an extra space in front of each line but the first.

slurp.words.Bag.sort(-*.value).fmt("%10s => %3d").join("\n").say;

Joining by hand is better. That’s a lot for a fairly short one-liner. There is a problem though that I tripped over before. The format string is using %10s to indent and right-align the first column, which works nicely unless the values won’t fit anymore. So we would need to find the longest word and use .chars to get the column width.
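To make the fmt chain described above concrete, here is a small sketch on a hand-written list of Pairs (the data is made up). It passes the separator as the second argument to fmt, which has the same effect as joining by hand.

```perl6
# Made-up word counts, already sorted by descending value.
my @pairs = (paul => 3, peter => 2, marry => 1);

# Each Pair is formatted with its key filling %10s and its value filling %3d;
# the second argument replaces the default separator (a single space).
my $report = @pairs.fmt("%10s => %3d", "\n");

put $report;
```

The fixed width of 10 is exactly the weak spot mentioned above: a word longer than ten characters pushes its line out of alignment.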

The first thing to solve the problem was a hack. Often you want to write a small CLI tool that reads stuff from STDIN and that requires a test. There is currently no way to have an IO::Handle that reads from a Str (there is an incomplete module for writing). We don’t really need a whole class in that case because slurp will simply call .slurp-rest on $*IN.

$*IN = <peter paul marry peter paul paul> but role { method slurp-rest { self.Str } };

It’s a hack because it will fail on any form of type check and it won’t work for anything but slurp. Also we actually untie STDIN from $*IN. Don’t try this at homework.

Now we can happily slurp and start counting.

my %counted-words = slurp.words.Bag; my $word-width = [max] %counted-words.keys».chars;

And continue the chain where we broke it apart.

%counted-words.sort(-*.value).fmt("%{$word-width}s => %3d").join("\n").say;

Solved but ugly. We broke a one-liner apart‽ Let’s fix fmt to have it whole again.

What we want is a method fmt that takes a Positional, a printf-style format string and a block per %* in the format string. Also we may need a separator to forward to self.fmt.

 8 my multi method fmt(Positional:D: $fmt-str, *@width where *.all ~~ Callable, :$separator = " "){
 9     self.fmt(
10         $fmt-str.subst(:g, "%*", {
11             my &width = @width[$++] // Failure.new("missing width block");
12             '%' ~ (&width.count == 2 ?? width(self, $_) !! width(self))
13         }), $separator);
14 }

The expression *.all ~~ Callable simply checks if all elements in the slurpy array implement CALL-ME (that’s the real method that is executed when you do foo()).

We then use subst on the format string to replace %*, whereby the replacement is a (closure) block that is called once per match. And here we have the nice idiom I stepped on.

say "1-a 2-b 3-c".subst(:g, /\d/, {<one two three>[$++]});

# OUTPUT«one-a two-b three-c»

The anonymous state variable $ is counting up by one from 0 for every execution of the block. What we actually do here is remove a loop by sneaking an extra counter and an array subscript into the loop subst must have. Or we could say that we inject an iterator pull into the loop inside subst. One could argue that subst should accept a Seq as its 2nd positional, which would make a call redundant. Anyway, we got a hole plugged.

In line 11 we take one element out of the slurpy array or create a Failure if there is no element. We store the block in a variable because we want to introspect in line 12. If the block takes two Positionals, we feed the topic subst is calling the block with as a 2nd parameter to our stored block. That happens to be a Match and may be useful to react on what was matched. In our case we know that we matched on %* and the current position is counted by $++ anyway. With that done we got a format string augmented with a column provided by the user of our version of fmt.

The user supplied block is called with a list of Pairs. We have to go one level deeper to get the biggest key.

{[max] .values».keys».chars}

That’s what we have to supply to get the first column’s width, in a list of Pairs dropping out of Bag.sort.

print %counted-words.sort(-*.value).&fmt("%*s => %3d", {[max] .values».keys».chars}, separator => "\n");

The fancy .&fmt call is required because our free floating method is not a method of List. That spares us from having to augment List.

And there you have it. Another hole in CORE plugged.

Feels like many people are enjoying the nice weather. So a bit of a quiet week. Feels a lot like the quiet before the storm, though!

AST Node annotations.

loop and repeat not properly sinking (discarding generated values) by default.

@a.splice($offset) about 40x faster and made sure Thai digits ("\x0e50".."\x0e59", AKA ๐๑๒๓๔๕๖๗๘๙) now smart increment/decrement correctly.

gfldex reports again from the documentation effort:

Setty got completed and examples fixed.

roles was added to the typesystem documentation along with a few additions that were not documented anywhere else.

trusts trait is now documented.

Only 2 more weeks or so until the YAPC::Europe. The tension is mounting!

Because the project has not seen a release since mid-February, and there have been no commits since then, it was generally felt that the support for Parrot in NQP has become a maintenance burden, especially in the light of the development of the JavaScript backend. So all code related to Parrot has now been removed from the NQP codebase (as it was removed from the Rakudo codebase about a year ago).

It should be noted that without Parrot, Rakudo Perl 6 would not exist today. It is one of the giants on whose shoulders Rakudo Perl 6 is standing. So let me again express gratitude to the multitude of developers who have worked on the Parrot project. Even though it didn’t turn out the way it was intended, it was definitely not for nothing!

The First Alpine Perl Workshop (a cooperation of the Austrian and Swiss Perl Mongers) will be held in Innsbruck, Austria on 2 and 3 September. There is still time to submit a Perl 6 talk! If you couldn’t make it to the YAPC::Europe this year, or you have some extra time to spend after coming back from the YAPC::Europe, Innsbruck and the Alpine Perl Workshop are definitely worth looking into!

“useless use” area.

used that was also used inside another module and there had been a use lib in between the use statements.

supply block. This is a big step towards more stability of asynchronous programs.

.min, .max and .minmax can be called on anything that can be seen as a .list.

Signatures.

gfldex++ reports from the Documentation Dept:

A nice harvest, with some blog posts that I missed in the past weeks.

A nice harvest as well!

See you next week!

In #perl6 we are asked on a regular basis how to flatten deeply nested lists. The naive approach of calling flat doesn’t do what beginners wish it to do.

my @a = <Peter Paul> Z <70 42> Z <green yellow>;
dd @a;
# Array @a = [("Peter", IntStr.new(70, "70"), "green"), ("Paul", IntStr.new(42, "42"), "yellow")]
dd @a.flat.flat;
# ($("Peter", IntStr.new(70, "70"), "green"), $("Paul", IntStr.new(42, "42"), "yellow")).Seq

The reason why @a is so resistant to flattening is that flat will stop on the first item, which happens to be a list in the example above. We can show that with dd.

for @a { .&dd }
# List @a = $("Peter", IntStr.new(70, "70"), "green")
# List @a = $("Paul", IntStr.new(42, "42"), "yellow")

The reason why this easy thing isn’t easy is that you are not meant to do it. Flattening lists may work well with short simple list literals but will become pricey on lazy lists, supplies or 2TB of BIG DATA. Leave the data as it is and use destructuring to untangle nested lists.
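As a sketch of the destructuring advice above, the nested list can be taken apart with a sub-signature instead of being flattened. The variable names $name, $age and $colour are made up for this example.

```perl6
my @a = <Peter Paul> Z <70 42> Z <green yellow>;

# The parenthesised sub-signature unpacks each inner list into named variables.
my @lines = @a.map: -> ($name, $age, $colour) {
    "$name is $age and likes $colour"
};

.put for @lines;
```

The structure of @a stays intact, so the same data can be walked again later with a different signature.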

If you must iterate in a flattening fashion over your data, use a lazy iterator.

sub descend(Any:D \l){ gather l.deepmap: { .take } }
dd descend @a;
# ("Peter", IntStr.new(70, "70"), "green", "Paul", IntStr.new(42, "42"), "yellow").Seq

By maintaining the structure of your data you can reuse that structure later, which includes changes in half a year’s time.

put @a.map({ '<tr>' ~ .&descend.map({"<td>{.Str}</td>"}).join ~ "</tr>" }).join("\n");
# <tr><td>Peter</td><td>70</td><td>green</td></tr>
# <tr><td>Paul</td><td>42</td><td>yellow</td></tr>

There are quite a few constructs that will require you to flatten to feed the result to operators and loops. If you concatenate list-alikes like Ranges or Seq returned by metaops, flat will come in handy.

"BenGoldberg++ for this pretty example".trans( [ flat "A".."Z", "a".."z"] => [ flat "𝓐".."𝓩", "𝓪".."𝔃" ] ).put # OUTPUT«𝓑𝓮𝓷𝓖𝓸𝓵𝓭𝓫𝓮𝓻𝓰++ 𝓯𝓸𝓻 𝓽𝓱𝓲𝓼 𝓹𝓻𝓮𝓽𝓽𝔂 𝓮𝔁𝓪𝓶𝓹𝓵𝓮»

Nil is the little parent of Failure and represents the absence of a value. It’s the John Doe of the Perl 6 world. Its undefined nature means we can talk about it but we don’t know its value. If we try to coerce it to a presentable value we will be warned.

my $a := Nil; dd $a.Str, $a.Int;
# OUTPUT«Use of Nil in string context in block <unit> at <tmp> line 1
# Use of Nil in numeric context in block <unit> at <tmp> line 1
# ""
# 0»

As the output shows it still coerces to the closest thing we have for an undefined string or number. Sometimes the empty string is outright dangerous.

sub somesub { Nil };
my $basename = somesub;
spurt("$basename.html", "<body>oi!</body>");

If we do that in a loop we would drop plenty of “.html” into the filesystem. Since this can depend on input data, some cunning individual might take advantage of our neglect. We can’t test for Nil in $basename, because assignment of Nil reverts the container to its default value. The default default value for the default type is Any. We can protect ourselves against undefined values with a :D type smiley.

my Str:D $basename = somesub;

That would produce a runtime error for anything but Nil, because the default value for a container of type Str:D is Str:D. A type object that happens to be undefined and won’t turn into anything other than the empty string. Not healthy when used with filenames.

We still get the warning though, which means that warn is called. As it happens warn will raise a control exception, in this instance of type CX::Warn. We can catch that with a CONTROL block and forward it to die.

sub niler {Nil};
my Str $a = niler();
say("$a.html", "sometext");
say "alive"; # this line is dead code
CONTROL { .die };

That’s quite a good solution to handle interpolation problems stemming from undefined values. The fact that any module or native function could produce undefined values makes it hard to reason about our programs. Having the control exception allows us to catch such problems anywhere in the current routine and allows us to deal with more than one place where we interpolate in one go.

Sometimes we want to stop those values early because between an assignment of an undefined value and the output of results minutes or even hours can pass by. Fail loudly and fail early they rightfully say. Type smileys can help us there but for Nil it left me with a nagging feeling. So I nagged and requested judgment, skids kindly provided a patch and judgment was spoken.

Perl 6 will be safe and sound again.

I’ve had a post in the works for a while about my work to make return faster (as well as routines that don’t return), as well as some notable multi-dispatch performance improvements. While I get over my writer’s block on that, here’s a shorter post on a number of small fixes I did on Thursday this week.

I’m actually a little bit “between things” at the moment. After some recent performance work, my next focus will be on concurrency stability fixes and improvements, especially to hyper and race. However, a little down on sleep thanks to the darned warm summer weather, I figured I’d spend a day picking a bunch of slightly less demanding bugs off from the RT queue. Some days, it’s about knowing what you shouldn’t work on…

MoarVM is somewhat lazy about a number of string operations. If you ask it to concatenate two simple strings, it will produce a string consisting of a strand list, with two strands pointing to the two strings. Similarly, a substring operation will produce a string with one strand and an offset into the original, and a repetition (using the x operator) will just produce a string with one strand pointing to the original string and having a repetition count. Note that it doesn’t currently go so far as allowing trees of strand strings, but it’s enough to prevent a bunch of copying – or at least delay it until a bunch of it can be done together and more cheaply.

The reason not to implement such cleverness is that it’s of course a whole lot more complex than simple immutable strings. And both RT #123602 and RT #127782 were about a sequence of actions that could trigger a bug. The precise sequence of actions was a repeat, followed by a concatenation, followed by a substring with certain offsets. It was caused by an off-by-one involving the repetition optimization, which was a little tedious to find but easy to fix.

RT #127749 stumbled across a case where an operation in a loop would work fine if its input was variable (like ^$n X ^$n), but fail if it were constant (such as ^5 X ^5). The X operator returns a Seq, which is an iterator that produces values once, throwing them away. Thus iterating it a second time won’t go well. The constant folding optimization is used so that things like 2 + 2 will be compiled into 4 (silly in this case, but more valuable if you’re doing things with constants). However, given the 1-shot nature of a Seq, it’s not suitable for constant folding. So, now it’s disallowed.
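A small sketch of the one-shot behaviour described above, independent of the optimizer. The variable names are made up; .cache is shown as one way to keep the values around when a result has to be walked more than once.

```perl6
my \products = (^2 X ^2);        # X returns a Seq
my @first = products;            # the first iteration consumes it
say @first.elems;

# Iterating the same Seq a second time is an error.
try { my @second = products }
my $error-name = $!.^name;
say $error-name;

# .cache remembers the values, so the result can be walked repeatedly.
my \cached = (^2 X ^2).cache;
say cached.elems;
say cached.elems;
```

A constant-folded Seq inside a loop would hit the same situation as the second assignment here, which is why folding it is not suitable.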

RT #127540 complained that an anon sub whose name happened to match that of an existing named sub in the same scope would trigger a bogus redeclaration error. Wait, you ask. Anonymous sub…whose name?! Well, it turns out that what anon really means is that we don’t install it anywhere. It can have a name that it knows itself by, however, which is useful should it show up in a backtrace, for example. The bogus error was easily fixed up.

Yes, it’s the obligatory “dive into the regex compiler” that all bug fixing days seem to come with. RT #128270 mentioned that "a" ~~ m:g:ignoremark/<[á]>/ would whine about chr being fed a negative codepoint. Digging into the MoarVM bytecode this compiled into was pretty easy, as chr only showed up one time, so the culprit had to be close to that. It turned out to be a failure to cope with end of string, and as regex bugs go wasn’t so hard to fix.

This is one of those no impact on real code, but sorta embarrassing bugs. A (;) would cause an infinite loop of errors, and (;;) and [0;] would emit similar errors also. The hang was caused by a loop that did next but failed to consider that the iteration variable needed updating in the optimizer. The second was because of constructing bad AST with integers hanging around in it rather than AST nodes, which confused all kinds of things. And that was RT #127473.

RT #128581 pointed out that my Array[Numerix] $x spat out an error that fell rather short of the standards we aim for in Perl 6. Of course, the error should complain that Numerix isn’t known and suggest that maybe you wanted Numeric. Instead, it spat out this:

===SORRY!=== Error while compiling ./x.pl6

An exception occurred while parameterizing Array

at ./x.pl6:1

Exception details:

===SORRY!=== Error while compiling

Cannot invoke this object (REPR: Null; VMNull)

at :

Which is ugly. The line number was at least correct, but still… Anyway, a small tweak later, it produced the much better:

$ ./perl6-m -e 'my Array[Numerix] $x;'

===SORRY!=== Error while compiling -e

Undeclared name:

Numerix used at line 1. Did you mean 'Numeric'?

RT #127785 observed that using a unit sub MAIN – which takes the entire program body as the contents of the MAIN subroutine – seemed to run into trouble if the signature contained a where clause:

% perl6 -e 'unit sub MAIN ($x where { $^x > 1 } ); say "big"' 4

===SORRY!===

Expression needs parens to avoid gobbling block

at -e:1

------> unit sub MAIN ($x where { $^x > 1 }⏏ ); say "big"

Missing block (apparently claimed by expression)

at -e:1

------> unit sub MAIN ($x where { $^x > 1 } );⏏ say "big"

The error here is clearly bogus. Finding a way to get rid of it wasn’t too hard, and it’s what I ended up committing. I’ll admit that I’m not sure why the check involved was put there in the first place, however. After some playing around with other situations that it might have aided, I failed to find any. There were also no spectests that depended on it. So, off it went.

The author of RT #128552 noticed that the docs talked about $?MODULE (“what module am I currently in”), to go with $?PACKAGE and $?CLASS. However, trying it out led to an undeclared variable error. It seems to have been simply overlooked. It was easy to add, so that’s what I did. I also found some old, provisional tests and brought them up to date in order to cover it.

The submitter of RT #127394 was creative enough to try -> SomeSubtype:D $x { }. That is, take a subset type and stick a :D on it, which adds the additional constraint that the value must be defined. This didn’t go too well, resulting in some rather strange errors. It turns out that, while picking the type apart so we can code-gen the parameter binding, we failed to consider such interesting cases. Thankfully, a small refactor made the fix easy once I’d figured out what was happening.

Not bad going. Nothing earth-shatteringly exciting, but all things that somebody had run into – and so others would surely run into again in the future. And, while I’ll be getting back to the bigger, hairier things soon, spending a day making Perl 6 a little nicer in 10 different ways was pretty fun.

On behalf of the Rakudo and Perl 6 development teams, I’m pleased to announce the July 2016 release of “Rakudo Star”, a useful and usable production distribution of Perl 6. The tarball for the July 2016 release is available from http://rakudo.org/downloads/star/.

This is the third post-Christmas (production) release of Rakudo Star and implements Perl v6.c. It comes with support for the MoarVM backend (all module tests pass on supported platforms).

Please note that this release of Rakudo Star is not fully functional with the JVM backend from the Rakudo compiler. Please use the MoarVM backend only.

In the Perl 6 world, we make a distinction between the language (“Perl 6”) and specific implementations of the language such as “Rakudo Perl”. This Star release includes release 2016.07 of the Rakudo Perl 6 compiler, version 2016.07 of MoarVM, plus various modules, documentation, and other resources collected from the Perl 6 community.

Some of the new compiler features since the last Rakudo Star release include:

Compiler maintenance since the last Rakudo Star release includes:

Notable changes in modules shipped with Rakudo Star:

perl6intro.pdf has also been updated.

There are some key features of Perl 6 that Rakudo Star does not yet handle appropriately, although they will appear in upcoming releases. Some of the not-quite-there features include:

In many places we’ve tried to make Rakudo smart enough to inform the programmer that a given feature isn’t implemented, but there are many that we’ve missed. Bug reports about missing and broken features are welcomed at .

See http://perl6.org/ for links to much more information about Perl 6, including documentation, example code, tutorials, presentations, reference materials, design documents, and other supporting resources. Some Perl 6 tutorials are available under the “docs” directory in the release tarball.

The development team thanks all of the contributors and sponsors for making Rakudo Star possible. If you would like to contribute, see http://rakudo.org/how-to-help, ask on the mailing list, or join us on IRC #perl6 on freenode.

As one would expect, methods can be declared and defined inside a class definition. Not so expected, and even less documented, are free floating methods declared with my method. Now why would you want:

my method foo(SomeClass:D:){self}

The obvious answer is the Meta Object Protocol's add_method method, as can be found in Rakudo:

src/core/Bool.pm

32: Bool.^add_method('pred', my method pred() { Bool::False });

33: Bool.^add_method('succ', my method succ() { Bool::True });

35: Bool.^add_method('enums', my method enums() { self.^enum_values });

There is another, more sneaky use for such a method. You may want to have a look at what is going on in a chain of method calls. We could rip the expression apart and insert a one shot variable, do our debugging output, and continue in the chain. Good names are important and wasting them on one shot variables is unnecessary cognitive load.

<a b c>.&(my method ::(List:D){dd self; self}).say;

# OUTPUT«("a", "b", "c")(a b c)»

A method cannot be nameless unless it has an explicit invocant, because Perl 6 won’t let us, so we use the empty scope :: to make the parser happy. With a proper invocant, we would not need that. Also, the anonymous method is not a member of List. We need to use postfix .& to call it. If we need that method more than once we could pull it out and give it a name.

my multi method debug(List:D:){dd self; self};

<a b c>.&debug.say;

# OUTPUT«("a", "b", "c")(a b c)»

Or we assign it as a default argument if we want to allow callbacks.

sub f(@l, :&debug = my method (List:D:){self}) { @l.&debug.say };

f <a b c>, debug => my method ::(List:D){dd self; self};

# OUTPUT«("a", "b", "c")(a b c)»

In Perl 6 pretty much everything is a class, including methods. If it’s a class it can be an object and we can sneak those in wherever we like.

You can find the most recent version of this tutorial here.

When you suddenly get this brilliant idea, the revolutionary game-changer, all you want to do is to immediately hack some proof of concept and turn a spark of creativity into a small project flame. So I'll leave you alone for now, with your third mug of coffee and birds chirping their first morning songs outside the window...

...A few years later we meet again. Your proof of concept has grown into a mature, recognizable product. Congratulations! But why the sad face? Your clients are complaining that your product is slow and unresponsive? They want more features? They generate more data? And you cannot do anything about it, despite the fact that you bought the shiniest, most expensive database server available?

When you were hacking your project on day 0, you were not thinking about long term scalability. All you wanted to do was to create a working prototype as fast as possible. So a single database design was the easiest, fastest and most obvious to use. You didn't think back then that a single machine cannot be scaled up infinitely. And now it is already too late.

LOOKS LIKE YOU'RE STUCK ON THE SHORES OF [monolithic design database] HELL. THE ONLY WAY OUT IS THROUGH...

(DOOM quote)

Sharding is the process of distributing your clients' data across multiple databases (called shards). By doing so you will be able to:

But if you already have single (monolithic) database this process is like converting your motorcycle into a car... while riding.

This is a step-by-step guide to a very tricky process. And the worst thing you can do is to panic because your product is collapsing under its own weight and you have a lot of pressure from clients. The whole process may take weeks, even months. It will use a significant amount of human and time resources. And it will pause development of new features. Be prepared for that. And do not rush to the next stage until you are absolutely sure the current one is completed.

So what is the plan?

In monolithic database design data classification is irrelevant but it is the most crucial part of sharding design. Your tables can be divided into three groups: client, context and neutral.

Let's assume your product is car rental software and do a quick exercise:

+----------+ +------------+ +-----------+

| clients | | cities | | countries |

+----------+ +------------+ +-----------+

+-| id | +--| id | +--| id |

| | city_id |>--+ | country_id |>--+ | name |

| | login | | | name | +-----------+

| | password | | +------------+

| +----------+ |

| +------+

+--------------------+ |

| | +-------+

+---------------+ | | | cars |

| rentals | | | +-------+

+---------------+ | | +--| id |

+-| id | | | | | vin |

| | client_id |>-+ | | | brand |

| | start_city_id |>----+ | | model |

| | start_date | | | +-------+

| | end_city_id |>----+ |

| | end_date | |

| | car_id |>-------+ +--------------------+

| | cost | | anti_fraud_systems |

| +---------------+ +--------------------+

| | id |--+

| +-----------+ | name | |

| | tracks | +--------------------+ |

| +-----------+ |

+-----<| rental_id | |

| latitude | +--------------------------+ |

| longitude | | blacklisted_credit_cards | |

| timestamp | +--------------------------+ |

+-----------+ | anti_fraud_system_id |>-+

| number |

+--------------------------+

Client tables contain data owned by your clients. To find them, you must start in some root table - clients in our example. Then follow every parent-to-child relation (only in this direction) as deep as you can. In this case we descend into rentals and then from rentals further to tracks. So our client tables are: clients, rentals and tracks.

Single client owns subset of rows from those tables, and those rows will always be moved together in a single transaction between shards.

Context tables put your clients' data in context. To find them, follow every child-to-parent relation (only in this direction) from every client table as far up as you can. Skip a table if it is already classified. In this case we ascend from clients to cities and from cities further to countries. Then from rentals we can ascend to clients (already classified), cities (already classified) and cars. And from tracks we can ascend into rentals (already classified). So our context tables are: cities, countries and cars.

Context tables should be synchronized across all shards.

Neutral tables are everything else. They must not be reachable from any client or context table through any relation. However, there may be relations between them. So our neutral tables are: anti_fraud_systems and blacklisted_credit_cards.

Neutral tables should be moved outside of shards.
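The three classification rules can be sketched as two graph walks. This is only a rough sketch under stated assumptions: the function classify_tables is hypothetical, foreign keys are given as (child table, parent table) pairs typed in by hand from the car rental diagram, and a table that hangs below a context table without being a client table is simply reported as neutral instead of being flagged for review.

```python
from collections import deque


def classify_tables(tables, foreign_keys, root):
    """Classify tables as client, context or neutral.

    foreign_keys is a list of (child_table, parent_table) pairs.
    """
    children = {t: set() for t in tables}
    parents = {t: set() for t in tables}
    for child, parent in foreign_keys:
        children[parent].add(child)
        parents[child].add(parent)

    # Client tables: descend from the root through parent-to-child relations.
    client = {root}
    queue = deque([root])
    while queue:
        for child in children[queue.popleft()]:
            if child not in client:
                client.add(child)
                queue.append(child)

    # Context tables: ascend from client tables through child-to-parent relations.
    context = set()
    queue = deque(client)
    while queue:
        for parent in parents[queue.popleft()]:
            if parent not in client and parent not in context:
                context.add(parent)
                queue.append(parent)

    # Neutral tables: everything that was not reached.
    neutral = set(tables) - client - context
    return client, context, neutral


TABLES = ["clients", "cities", "countries", "rentals", "cars", "tracks",
          "anti_fraud_systems", "blacklisted_credit_cards"]
FOREIGN_KEYS = [
    ("clients", "cities"), ("cities", "countries"),
    ("rentals", "clients"), ("rentals", "cities"), ("rentals", "cars"),
    ("tracks", "rentals"),
    ("blacklisted_credit_cards", "anti_fraud_systems"),
]
client, context, neutral = classify_tables(TABLES, FOREIGN_KEYS, "clients")
```

For a real schema the foreign key pairs could be read from the database catalog instead of being listed by hand.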

Take any tool that can visualize your database in the form of a diagram. Print it and pin it on the wall. Then take markers in 3 different colors - one for each table type - and start marking tables in your schema.

If you have some tables not connected due to technical reasons (for example MySQL partitioned tables or TokuDB tables do not support foreign keys), draw this relation and assume it is there.

If you are not certain about a specific table, leave it unmarked for now.

Done? Good :)

Q: Is it a good idea to cut all relations between client and context tables, so that only two types - client and neutral - remain?

A: You will save a bit of work because no synchronization of context data across all shards will be required. But at the same time any analytics will be nearly impossible. For example, even a simple task like finding which car was rented the most times will require a software script to do the join. Also there won't be any protection against software bugs, for example it will be possible to rent a car that does not even exist.

There are two cases when converting a context table to neutral table is justified:

clients, cities and countries, so cities and countries should remain as context tables.

In every other case it is a very bad idea to make neutral data out of context data.

Q: Is it a good idea to shard only big tables and leave all small tables together on a monolithic database?

A: In our example you have one puffy table - tracks. It keeps the GPS trail of every car rental and will grow really fast. So if you only shard this data, you will save a lot of work because only small application changes will be required. But in the real world you will have 100 puffy tables and that means 100 places in application logic where you have to juggle database handles to locate all client data. That also means you won't be able to split your clients between many data centers. Also you won't be able to reduce downtime costs to 1/n (where n is the number of shards) if some data corruption in the monolithic database occurs and recovery is required. And the analytics argument mentioned above also applies here.

It is a bad idea to do such sub-sharding. It may seem easy and fast - but the sooner you do proper sharding that includes all of your clients' data, the better.

There are a few design patterns that are perfectly fine or acceptable in monolithic database design but are no-go in sharding.

Aside from the obvious risk of referencing nonexistent records, this issue can leave junk behind when you migrate clients between shards later for load balancing. The fix is simple - add a foreign key if there should be one.

The only exception is when it cannot be added due to technical limitations, such as usage of TokuDB or partitioned MySQL tables that simply do not support foreign keys. Skip those, I'll tell you how to deal with them during data migration later.

Because clients may be located on different shards, their rows may not point at each other. Typical case where it happens is affiliation.

+-----------------------+

| clients |

+-----------------------+

| id |------+

| login | |

| password | |

| referred_by_client_id |>-----+

+-----------------------+

To fix this issue you must remove foreign key and rely on software instead to match those records.

Because clients may be located on different shards their rows may not reference another client (also indirectly). Typical case where it happens is post-and-comment discussion.

+----------+ +------------+

| clients | | blog_posts |

+----------+ +------------+

+-| id |---+ | id |---+

| | login | +---<| client_id | |

| | password | | text | |

| +----------+ +------------+ |

| |

| +--------------+ |

| | comments | |

| +--------------+ |

| | blog_post_id |>-----------------+

+---<| client_id |

| text |

+--------------+

First client posted something and a second client commented on it. This comment references two clients at the same time - the second one directly and the first one indirectly through the blog_posts table. That means it will be impossible to satisfy both foreign keys in the comments table if those clients are not in a single database.

To fix this you must choose which relation from table that refers to multiple clients is more important, remove the other foreign keys and rely on software instead to match those records.

So in our example you may decide that relation between comments and blog_posts remains, relation between comments and clients is removed and you will use application logic to find which client wrote which comment.

This is the same issue as nested connection but caused by application errors instead of intentional design.

+----------+

| clients |

+----------+

+-------------------| id |--------------------+

| | login | |

| | password | |

| +----------+ |

| |

| +-----------------+ +------------------+ |

| | blog_categories | | blog_posts | |

| +-----------------+ +------------------+ |

| | id |----+ | id | |

+-<| client_id | | | client_id |>-+

| name | +--<| blog_category_id |

+-----------------+ | text |

+------------------+

For example, the first client defined his own blog categories for his own blog posts. But let's say there was a mess with www sessions or some caching mechanism, and a blog post of the second client was accidentally assigned to a category defined by the first client.

Those issues are very hard to find, because schema itself is perfectly fine and only data is damaged.
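One way to hunt for such damaged rows is to join both tables and compare their owners. Below is a minimal sketch that uses Python's sqlite3 module as a stand-in for the real database; the helper name find_cross_client_posts and the sample rows are made up for illustration.

```python
import sqlite3


def find_cross_client_posts(conn):
    """Return (post_id, post_client_id, category_client_id) for damaged rows."""
    return conn.execute(
        """
        SELECT p.id, p.client_id, c.client_id
        FROM blog_posts AS p
        JOIN blog_categories AS c ON c.id = p.blog_category_id
        WHERE p.client_id <> c.client_id
        ORDER BY p.id
        """
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE clients (id INTEGER PRIMARY KEY, login TEXT);
    CREATE TABLE blog_categories (
        id INTEGER PRIMARY KEY,
        client_id INTEGER NOT NULL REFERENCES clients (id),
        name TEXT
    );
    CREATE TABLE blog_posts (
        id INTEGER PRIMARY KEY,
        client_id INTEGER NOT NULL REFERENCES clients (id),
        blog_category_id INTEGER NOT NULL REFERENCES blog_categories (id),
        text TEXT
    );
    INSERT INTO clients VALUES (1, 'first'), (2, 'second');
    INSERT INTO blog_categories VALUES (10, 1, 'travel'), (20, 2, 'food');
    INSERT INTO blog_posts VALUES
        (100, 1, 10, 'fine'),
        (101, 2, 20, 'fine'),
        (102, 2, 10, 'post of second client in category of first client');
    """
)
damaged = find_cross_client_posts(conn)
```

A similar query has to be written for every pair of client tables that reference each other, which is part of why these issues are so tedious to track down.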

Client tables must be reached exclusively by descending from root table through parent-to-child relations.

+----------+

| clients |

+----------+

+-------------| id |

| | login |

| | password |

| +----------+

|

| +-----------+ +------------+

| | photos | | albums |

| +-----------+ +------------+

| | id | +---| id |

+-<| client_id | | | name |

| album_id |>---+ | created_at |

| file | +------------+

+-----------+

So we have photo management software this time and when a client synchronizes photos from a camera, new album is created automatically for better import visualization. This is an obvious issue even in monolithic database - when all photos are removed from album, then it becomes zombie row. It won't be deleted automatically by cascade and cannot be matched with client anymore. In sharding, this also causes misclassification of client table as context table.

To fix this issue foreign key should be added from albums to clients. This may also fix classification for some tables below albums, if any.

Table cannot be classified as two types at the same time.

+----------+

| clients |

+----------+

+-------------| id |-------------+

| | login | |

| | password | |

| +----------+ (nullable)

| |

| +-----------+ +-----------+ |

| | blogs | | skins | |

| +-----------+ +-----------+ |

| | id | +---| id | |

+-<| client_id | | | client_id |>-+

| skin_id |>---+ | color |

| title | +-----------+

+-----------+

In this product client can choose predefined skin for his blog. But can also define his own skin color and use it as well.

Here single interface of skins table is used to access data of both client and context type. A lot of "let's allow client to customize that" features end up implemented this way. While being a smart hack - with no table schema duplication and only simple WHERE client_id IS NULL OR client_id = 123 added to query to present both public and private templates for client - this may cause a lot of trouble in sharding.

The fix is to go with dual foreign key design and separate tables. Create constraint (or trigger) that will protect against assigning blog to public and private skin at the same time. And write more complicated query to get blog skin color.

+----------+

| clients |

+----------+

+-------------| id |

| | login |

| | password |

| +----------+

|

| +---------------+ +--------------+

| | private_skins | | public_skins |

| +---------------+ +--------------+

| | id |--+ +--| id |

+--<| client_id | | | | color |

| | color | | | +--------------+

| +---------------+ | |

| | |

| (nullable)

| | |

| | +------+

| +-----+ |

| | |

| +-----------------+ | |

| | blogs | | |

| +-----------------+ | |

| | id | | |

+------<| client_id | | |

| private_skin_id |>-+ |

| public_skin_id |>-----+

| title |

+-----------------+
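The dual foreign key design can be sketched as follows, again with sqlite3 standing in for the real database. The CHECK constraint and the COALESCE query are one possible way to implement the protection and the more complicated lookup mentioned above, not the only one; the sample data and helper names are invented.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript(
    """
    CREATE TABLE clients (id INTEGER PRIMARY KEY, login TEXT);
    CREATE TABLE public_skins (id INTEGER PRIMARY KEY, color TEXT NOT NULL);
    CREATE TABLE private_skins (
        id INTEGER PRIMARY KEY,
        client_id INTEGER NOT NULL REFERENCES clients (id),
        color TEXT NOT NULL
    );
    CREATE TABLE blogs (
        id INTEGER PRIMARY KEY,
        client_id INTEGER NOT NULL REFERENCES clients (id),
        private_skin_id INTEGER REFERENCES private_skins (id),
        public_skin_id INTEGER REFERENCES public_skins (id),
        title TEXT,
        -- a blog may use a private skin or a public skin, never both
        CHECK (private_skin_id IS NULL OR public_skin_id IS NULL)
    );
    INSERT INTO clients VALUES (1, 'alice');
    INSERT INTO public_skins VALUES (1, '#FFFFFF');
    INSERT INTO private_skins VALUES (1, 1, '#AA55BC');
    INSERT INTO blogs VALUES (1, 1, NULL, 1, 'uses public skin');
    INSERT INTO blogs VALUES (2, 1, 1, NULL, 'uses private skin');
    """
)


def skin_colors(conn):
    """Return (blog_id, color), whichever skin table the color comes from."""
    return conn.execute(
        """
        SELECT b.id, COALESCE(priv.color, pub.color)
        FROM blogs AS b
        LEFT JOIN private_skins AS priv ON priv.id = b.private_skin_id
        LEFT JOIN public_skins AS pub ON pub.id = b.public_skin_id
        ORDER BY b.id
        """
    ).fetchall()


def try_both_skins(conn):
    """Try to assign both skins to one blog; the constraint should refuse."""
    try:
        conn.execute("INSERT INTO blogs VALUES (3, 1, 1, 1, 'both skins')")
    except sqlite3.IntegrityError:
        return False
    return True
```

With this layout private_skins is a plain client table and public_skins is a plain context table, so neither needs a mixed classification.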

However - this fix is optional. I'll show you how to deal with maintaining mixed data types in chapter about mutually exclusive IDs. It will be up to you to decide if you want less refactoring but more complicated synchronization.

Beware! Such fix may also accidentally cause another issue described below.

Every client table without unique constraint must be reachable by not nullable path of parent-to-child relations or at most single nullable path of parent-to-child relations. This is very tricky issue which may cause data loss or duplication during client migration to database shard.

+----------+

| clients |

+----------+

+-------------| id |-------------+

| | login | |

| | password | |

| +----------+ |

| |

| +-----------+ +-----------+ |

| | time | | distance | |

| +-----------+ +-----------+ |

| | id |--+ +--| id | |

+-<| client_id | | | | client_id |>-+

| amount | | | | amount |

+-----------+ | | +-----------+

| |

(nullable)

| |

| |

+--------+ +---------+

| |

| +-------------+ |

| | parts | |

| +-------------+ |

+--<| time_id | |

| distance_id |>--+

| name |

+-------------+

This time our product is an application that helps you with a car maintenance schedule. Our client's car has 4 tires that must be replaced after 10 years or 100000km and 4 spark plugs that must be replaced after 100000km. So 4 indistinguishable rows for tires are added to parts table (they reference both time and distance) and 4 indistinguishable rows are added for spark plugs (they reference only distance).

Now to migrate the client to a shard we have to find which rows from the parts table he owns. By following relations through the time table we will get 4 tires. But because this path is nullable at some point, we are not sure if we found all records. And indeed, by following relations through the distance table we find 4 tires and 4 spark plugs. Since this path is also nullable at some point, we are not sure if we found all records. So we must combine results from the time and distance paths, which gives us... 8 tires and 4 spark plugs? Well, that looks wrong. Maybe let's group it by time and distance pair, which gives us... 1 tire and 1 spark plug? So depending on how you combine indistinguishable rows from many nullable paths to get the final row set, you may suffer either data duplication or data loss.

You may say: Hey, that's easy - just select all rows through time path, then all rows from distance path that do not have time_id, then union both results. Unfortunately paths may be nullable somewhere earlier and several nullable paths may lead to single table, which will produce bizarre logic to get indistinguishable rows set properly.

To solve this issue make sure there is at least one not nullable path that leads to every client table (it does not matter how many tables it goes through). In our example an extra foreign key should be added between clients and parts.
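The duplication and the loss are easy to reproduce. Here is a small sketch with sqlite3, where UNION ALL stands for naively combining both paths and UNION stands for grouping by the time and distance pair. The sample ids, the helper count_by_name and the client_id column added at the end are illustrative assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE time (id INTEGER PRIMARY KEY, client_id INTEGER, amount INTEGER);
    CREATE TABLE distance (id INTEGER PRIMARY KEY, client_id INTEGER, amount INTEGER);
    CREATE TABLE parts (time_id INTEGER, distance_id INTEGER, name TEXT);
    INSERT INTO time VALUES (1, 1, 10);
    INSERT INTO distance VALUES (1, 1, 100000);
    """
)
# 4 indistinguishable tires (both paths) and 4 spark plugs (distance path only).
conn.executemany(
    "INSERT INTO parts VALUES (?, ?, ?)",
    [(1, 1, "tire")] * 4 + [(None, 1, "spark plug")] * 4,
)

VIA_TIME = """SELECT p.time_id, p.distance_id, p.name FROM parts p
              JOIN time t ON t.id = p.time_id WHERE t.client_id = 1"""
VIA_DISTANCE = """SELECT p.time_id, p.distance_id, p.name FROM parts p
                  JOIN distance d ON d.id = p.distance_id WHERE d.client_id = 1"""


def count_by_name(rows):
    counts = {}
    for _time_id, _distance_id, name in rows:
        counts[name] = counts.get(name, 0) + 1
    return counts


# Combining both nullable paths naively duplicates the tires.
duplicated = count_by_name(conn.execute(f"{VIA_TIME} UNION ALL {VIA_DISTANCE}"))
# Removing duplicates collapses indistinguishable rows and loses data.
lost = count_by_name(conn.execute(f"{VIA_TIME} UNION {VIA_DISTANCE}"))

# The fix: a direct path from clients to parts (nullable here only for brevity).
conn.execute("ALTER TABLE parts ADD COLUMN client_id INTEGER")
conn.execute("UPDATE parts SET client_id = 1")
correct = count_by_name(
    conn.execute("SELECT time_id, distance_id, name FROM parts WHERE client_id = 1")
)
```

In a real schema the new column would of course be NOT NULL with a proper foreign key to clients.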

TL;DR

Q: How many legs does the horse have?

A1: Eight. Two front, two rear, two left and two right.

A2: Four. Those attached to it.

MySQL specific issue.

CREATE TABLE `foo` (

`id` int(10) unsigned DEFAULT NULL,

KEY `id` (`id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

CREATE TABLE `bar` (

`foo_id` int(10) unsigned NOT NULL,

KEY `foo_id` (`foo_id`),

CONSTRAINT `bar_ibfk_1` FOREIGN KEY (`foo_id`) REFERENCES `foo` (`id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

mysql> INSERT INTO `foo` (`id`) VALUES (1);

Query OK, 1 row affected (0.01 sec)

mysql> INSERT INTO `foo` (`id`) VALUES (1);

Query OK, 1 row affected (0.00 sec)

mysql> INSERT INTO `bar` (`foo_id`) VALUES (1);

Query OK, 1 row affected (0.01 sec)

Which row from foo table is referenced by row in bar table?

You don't know because behavior of foreign key constraint is defined as "is there any parent I can refer to?" instead of "do I have exactly one parent?". There are no direct row-to-row references as in other databases. And it's not a bug, it's a feature.

Of course this causes a lot of weird bugs when trying to locate all rows that belong to given client, because results can be duplicated on JOINs.

To fix this issue just make sure every referenced column (or set of columns) is unique. They must not be nullable and must all be used as primary or unique key.

Rows in the same client table cannot be in direct relation. Typical case is expressing all kinds of tree or graph structures.

+----------+

| clients |

+----------+

| id |----------------------+

| login | |

| password | |

+----------+ |

|

+--------------------+ |

| albums | |

+--------------------+ |

+---| id | |

| | client_id |>-+

+--<| parent_album_id |

| name |

+--------------------+

This causes issues when client data is inserted into target shard.

For example in our photo management software client has album with id = 2 as subcategory of album with id = 1. Then he flips this configuration, so that the album with id = 2 is on top. In such scenario if database returned client rows in default, primary key order then it won't be possible to insert album with id = 1 because it requires presence of album with id = 2.

Yes, you can disable foreign key constraints to be able to insert self-referenced data in any order. But by doing so you may mask many errors - for example broken references to context data.

For good sharding experience all relations between rows of the same table should be stored in separate table.

+----------+

| clients |

+----------+

| id |----------------------+

| login | |

| password | |

+----------+ |

|

+--------------------+ |

| albums | |

+--------------------+ |

+-+=====| id | |

| | | client_id |>-+

| | | name |

| | +--------------------+

| |

| | +------------------+

| | | album_hierarchy |

| | +------------------+

| +---<| parent_album_id |

+-----<| child_album_id |

+------------------+
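Both variants can be tried out with sqlite3 as a stand-in for the target shard, with foreign keys enforced. This is only a sketch: the client_id column is left out for brevity, and the album names and helper functions are invented.

```python
import sqlite3


def new_shard(schema):
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(schema)
    return conn


SELF_REFERENCING = """
CREATE TABLE albums (
    id INTEGER PRIMARY KEY,
    parent_album_id INTEGER REFERENCES albums (id),
    name TEXT
);
"""

SEPARATE_HIERARCHY = """
CREATE TABLE albums (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE album_hierarchy (
    parent_album_id INTEGER NOT NULL REFERENCES albums (id),
    child_album_id INTEGER NOT NULL REFERENCES albums (id)
);
"""


def copy_self_referencing():
    shard = new_shard(SELF_REFERENCING)
    try:
        # Rows arrive in primary key order; album 1 now has album 2 as parent.
        shard.execute("INSERT INTO albums VALUES (1, 2, 'holidays')")
        shard.execute("INSERT INTO albums VALUES (2, NULL, 'family')")
    except sqlite3.IntegrityError:
        return False
    return True


def copy_with_hierarchy_table():
    shard = new_shard(SEPARATE_HIERARCHY)
    # Albums go in first, in any order; relations between them follow.
    shard.execute("INSERT INTO albums VALUES (1, 'holidays')")
    shard.execute("INSERT INTO albums VALUES (2, 'family')")
    shard.execute("INSERT INTO album_hierarchy VALUES (2, 1)")
    return shard.execute("SELECT COUNT(*) FROM album_hierarchy").fetchone()[0]
```

The first copy fails on the very first row, while the second one never needs the constraints to be disabled.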

Triggers cannot modify rows. Or roar too loud :)

+----------+

| clients |

+----------+

+----------| id |------------------+

| | login | |

| | password | |

| +----------+ |

| |

| +------------+ +------------+ |

| | blog_posts | | activities | |

| +------------+ +------------+ |

| | id | | id | |

+-<| client_id | | client_id |>-+

| content | | counter |

+------------+ +------------+

: :

: :

(on insert post create or increase activity)

This is common usage of a trigger to automatically aggregate some statistics. Very useful and safe - doesn't matter which part of application adds new blog post, activities counter will always go up.

However, in sharding this causes a lot of trouble when inserting client data. Let's say he has 4 blog posts and 4 activities. If posts are inserted first, they bump the activity counter through the trigger and we have a collision in the activities table due to an unexpected row. When activities are inserted first, they are unexpectedly increased by post inserts later, ending with an invalid total of 8 activities.

In sharding triggers can only be used if they do not modify data. For example it is OK to do sophisticated constraints using them. Triggers that modify data must be removed and their logic ported to application.
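The second scenario (activities inserted first) can be reproduced in a few lines with sqlite3. The trigger below is a simplified assumption of what such a trigger could look like: it only increases an existing counter and does not create missing activity rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE blog_posts (id INTEGER PRIMARY KEY, client_id INTEGER, content TEXT);
    CREATE TABLE activities (id INTEGER PRIMARY KEY, client_id INTEGER, counter INTEGER);
    CREATE TRIGGER count_posts AFTER INSERT ON blog_posts
    BEGIN
        UPDATE activities SET counter = counter + 1 WHERE client_id = NEW.client_id;
    END;
    """
)

# Migration copies the already aggregated counter first ...
conn.execute("INSERT INTO activities VALUES (1, 1, 4)")
# ... and then the four posts it was aggregated from.
conn.executemany(
    "INSERT INTO blog_posts VALUES (?, 1, 'post')", [(i,) for i in range(1, 5)]
)
counter = conn.execute(
    "SELECT counter FROM activities WHERE client_id = 1"
).fetchone()[0]
```

The counter ends up doubled although no new post was written, which is exactly the kind of silent damage that moving the logic to the application avoids.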

Check if there are any issues described above in your printed schema and fix them.

And this is probably the most annoying part of the sharding process, as you will have to dig through a lot of code. Sometimes old, untested, undocumented and unmaintained.

When you are done your printed schema on the wall should not contain any unclassified tables.

Ready for next step?

It is time to dump monolithic database complete schema (tables, triggers, views and functions/procedures) to the shard_schema.sql file and prepare for sharding environment initialization.

Move all tables that are marked as neutral from shard_schema.sql file to separate neutral_schema.sql file. Do not forget to also move triggers, views or procedures associated with them.

Every primary key on shard should be of unsigned bigint type. You do not have to modify your existing schema installed on monolithic database. Just edit shard_schema.sql file and massively replace all numeric primary and foreign keys to unsigned big integers. I'll explain later why this is needed.

Dispatcher tells on which shard specific client is located. Absolute minimum is to have table where you will keep client id and shard number. Save it to dispatch_schema.sql file.

More complex dispatchers will be described later.
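The absolute minimum could look like this. It is a sketch with sqlite3 standing in for the dispatch database; the table name client_shards, its column names and the helper shard_for are assumptions, not something prescribed by this tutorial.

```python
import sqlite3

DISPATCH_SCHEMA = """
CREATE TABLE client_shards (
    client_id INTEGER PRIMARY KEY,
    shard_number INTEGER NOT NULL
);
"""


def shard_for(dispatch, client_id):
    """Return the shard number of a client, or None if the client is unknown."""
    row = dispatch.execute(
        "SELECT shard_number FROM client_shards WHERE client_id = ?", (client_id,)
    ).fetchone()
    return None if row is None else row[0]


dispatch = sqlite3.connect(":memory:")
dispatch.executescript(DISPATCH_SCHEMA)
dispatch.execute("INSERT INTO client_shards VALUES (123, 2)")
```

Here shard_for(dispatch, 123) tells the application to connect to shard 2 before touching any client data.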

From monolithic database dump data for neutral tables to neutral_data.sql file and for context tables to context_data.sql file. Watch out for tables order to avoid breaking foreign keys constraints.

You should have shard_schema.sql, neutral_schema.sql, dispatch_schema.sql, neutral_data.sql and context_data.sql files.

At this point you should also freeze all schema and common data changes in your application until sharding is completed.

Finally you can put all those new, shiny machines to good use.

Typical sharding environment consists of:

Each database should of course be replicated.

Nothing fancy, just regular database. Install neutral_schema.sql and feed neutral_data.sql to it.

Make separate user for application with read-only grants to read neutral data and separate user with read-write grants for managing data.

Every time client logs in to your product you will have to find which shard he is located on. Make sure all data fits into RAM, have a huge connection pool available. And install dispatch_schema.sql to it.

This is a weak point of all sharding designs. Should be off-loaded by various caches as much as possible.

They should all have the same power (CPU/RAM/IO) - this will speed things up because you can just randomly select shard for your new or migrated client without bothering with different hardware capabilities.

Configuration of shard databases is pretty straightforward. For every shard just install shard_schema.sql, feed context_data.sql file and follow two more steps.

Remember that context tables should be identical on all shards. Therefore it is a good idea to have separate user with read-write grants for managing context data. Application user should have read-only access to context tables to prevent accidental context data change.

This ideal design may be too difficult to maintain - every new table will require setting up separate grants. If you decide to go with single user make sure you will add some mechanism that monitors context data consistency across all shards.

Primary keys in client tables must be globally unique across whole product.

First of all - data split is a long process. Just pushing data between databases may take days or even weeks! And because of that it should be performed without any global downtime. So during monolithic to shard migration phase new rows will still be created in monolithic database and already migrated users will create rows on shards. Those rows must never collide.

Second of all - sharding does not end there. Later on you will have to load balance shards, move client between different physical locations, backup and restore them if needed. So rows must never collide at any time of your product life.

How to achieve that? Use offset and increment while generating your primary keys.

MySQL has ready to use mechanism:

auto_increment_increment - Set this global variable to 100 on all of your shards. That is also the maximum amount of shards you can have. Be generous here, as it will not be possible to change it later! You must have spare slots even if you don't have such amount of shards right now!

auto_increment_offset - Set this global value differently on all of your shards. First shard should get 1, second shard should get 2, and so on. Of course you cannot exceed value of auto_increment_increment.

Now your first shard for any table will generate 1, 101, 201, 301, ... , 901, 1001, 1101 auto increments and second shard will generate 2, 102, 202, 302, ... , 902, 1002, 1102 auto increments. And that's all! Your new rows will never collide, doesn't matter which shard they were generated on and without any communication between shards needed.
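The arithmetic behind this can be checked with a tiny model. The generator below only mimics the offset and increment rule described above; it does not talk to MySQL, and the function name auto_increments is made up.

```python
from itertools import islice


def auto_increments(offset, increment=100):
    """Yield the IDs a shard would generate with the given offset and increment."""
    if not 1 <= offset <= increment:
        raise ValueError("offset must be between 1 and increment")
    value = offset
    while True:
        yield value
        value += increment


first_shard = list(islice(auto_increments(1), 4))
second_shard = list(islice(auto_increments(2), 4))
```

Because every ID keeps the same remainder modulo the increment, two shards with different offsets can never produce the same value.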

TODO: add recipes for other database types

Now you should understand why I've told you to convert all numerical primary and foreign keys to unsigned big integers. The sequences will grow really fast, in our example 100x faster than on monolithic database.

Remember to set the same increment and corresponding offsets on replicas. Forgetting to do so will be lethal to the whole sharding design.

Your database servers should be set up. Check routing from the application, check user grants. And again - remember to have correct configurations (in Puppet for example) for the offsets of shards and their replicas. Run some failure simulations.

And move to the next step :)

Q: Can I do sharding without dispatch database? When client wants to log in I can just ask all shards for his data and use the one shard that will respond.

A: No. This may work when you start with three shards, but when you have 64 shards in 8 different data centers such fishing queries become impossible. Not to mention you will be very vulnerable to brute-force attacks - every password guess attempt will be multiplied by your application causing instant overload of the whole infrastructure.

Q: Can I use any no-SQL technology for dispatch and neutral databases?

A: Sure. You can use it instead of traditional SQL or as a supplementary cache.

There will be an additional step in your product. When a user logs in, the dispatch database must first be asked for the shard number. Then you connect to this shard and... it works! Your code will also have to use a separate database connection to access neutral data. And it will have to pick (roll) a shard when a new client registers and note this selection in the dispatch database.
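As a rough sketch of that extra step, here is the login and registration flow with plain dictionaries standing in for the dispatch database and the shards. All names (`dispatch`, `shards`, `register`, `log_in`) are hypothetical, and a real implementation would need transactions and caching on top.

```python
import random

# Hypothetical in-memory stand-ins for the real databases.
dispatch = {}                    # login -> shard number
shards = {1: {}, 2: {}, 3: {}}   # shard number -> {login: client row}

def register(login, password_hash, rng=random):
    """Create a client: check uniqueness in dispatch, roll a shard, note the choice."""
    if login in dispatch:
        raise ValueError(f"login {login!r} is already taken")
    shard_no = rng.choice(sorted(shards))
    dispatch[login] = shard_no   # reserve the login before creating any rows
    shards[shard_no][login] = {"login": login, "password": password_hash}
    return shard_no

def log_in(login):
    """Ask dispatch for the shard first, then read the client from that shard only."""
    shard_no = dispatch.get(login)
    if shard_no is None:
        return None              # unknown login: no shard is queried at all
    return shards[shard_no][login]

register("bob", "hash-of-password")
print(log_in("bob")["login"])
```

Only these two functions know about dispatch. Everything after `log_in` works against a single shard connection, just as it did against the monolithic database.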

That is the beauty of whole clients sharding - majority of your code is not aware of it.

If you modify neutral data this change should be propagated to every neutral database (you may have more of those in different physical locations).

The same applies to context data on shards, but all auto increment columns must be forced. This is because every shard will generate a different sequence. When you execute INSERT INTO skins (color) VALUES ('#AA55BC') without an explicit ID, shard 1 will assign a different ID to the new row than shard 2. And all client data that references this skin will be impossible to migrate between shards.
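The difference between letting each shard choose the ID and forcing it can be shown with a toy model. The `Shard` class below is a made-up stand-in for one shard's `skins` table. It assumes the auto increment behaves as "smallest value of the form offset + N * increment above the current maximum".

```python
class Shard:
    """Toy stand-in for one shard's skins table with MySQL-style auto increment."""

    def __init__(self, offset, increment=100):
        self.offset = offset
        self.increment = increment
        self.skins = {}  # id -> color

    def next_id(self):
        # Smallest value offset + N * increment that is above the current maximum.
        current_max = max(self.skins, default=0)
        candidate = self.offset
        while candidate <= current_max:
            candidate += self.increment
        return candidate

    def insert_skin(self, color, forced_id=None):
        skin_id = self.next_id() if forced_id is None else forced_id
        self.skins[skin_id] = color
        return skin_id

shard_1, shard_2 = Shard(offset=1), Shard(offset=2)

# Each shard picks its own ID: the same skin ends up under different IDs.
unforced = [s.insert_skin("#AA55BC") for s in (shard_1, shard_2)]
print(unforced)  # [1, 2]

# Forced ID: generate it once, then insert it explicitly on every shard.
skin_id = shard_1.next_id()
forced = [s.insert_skin("#10C0DE", forced_id=skin_id) for s in (shard_1, shard_2)]
print(forced)  # [101, 101]
```

With the forced ID a client row that references skin 101 means the same thing on every shard, so it can be moved freely.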

Dispatch serves two purposes. It allows you to find a client's shard by some unique attribute (login, email, and so on) and it also helps to guarantee such uniqueness. So for example when a new client is created, the dispatch database must be asked if the chosen login is available. Take extra care of the dispatch database. Off-load as much as you can by caching and schedule regular consistency checks between it and the shards.

Things get complicated if you have shards in many data centers. Unfortunately I cannot give you a universal algorithm for keeping them in sync, this is too application-specific.

Because your clients' data will be scattered across multiple shard databases, you will have to fix a lot of global queries used in analytical and statistical tools. What was trivial previously - for example SELECT cities.name, COUNT(*) AS amount FROM clients JOIN cities ON clients.city_id = cities.id GROUP BY cities.name ORDER BY amount DESC LIMIT 8 - will now require gathering all the needed data from the shards and performing intermediate materialization for grouping, ordering and limiting.

There are tools that help you with that. I've tried several solutions, but none was versatile, configurable and stable enough that I could recommend it.
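A minimal sketch of that intermediate materialization, assuming each shard has already returned its own GROUP BY result (without ORDER BY and LIMIT) as a dictionary. The city names and counts are invented sample data and `top_cities` is a hypothetical helper.

```python
from collections import Counter

def top_cities(per_shard_counts, limit=8):
    """Merge per-shard GROUP BY results, then order and limit in the application."""
    total = Counter()
    for counts in per_shard_counts:
        total.update(counts)  # adds the amounts city by city
    return total.most_common(limit)

# Invented results of the grouped query, one dictionary per shard.
shard_results = [
    {"Gdansk": 120, "Warsaw": 300, "Krakow": 80},
    {"Gdansk": 200, "Warsaw": 90},
    {"Krakow": 150, "Poznan": 10},
]
print(top_cities(shard_results, limit=3))
# [('Warsaw', 390), ('Gdansk', 320), ('Krakow', 230)]
```

Note that the LIMIT cannot be pushed down to the shards: a city that is ninth on every single shard may still be first globally, so each shard has to return its full grouped result.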

We got to the point when you have to switch your application to sharding flow. To avoid having two versions of code - old one for still running monolithic design and a new one for sharding, we will just connect monolithic database as another "fake" shard.

First you have to deal with auto increments. Set up in the configuration the same increment on your monolithic database as on shards and set up any free offset. Then check what is the current auto increment value for every client or context table and set the same value for this table located on every shard. Now your primary keys won't collide between "fake" and real shards during the migration. But beware: this can easily overflow tiny or small ints in your monolithic database, for example just adding three rows can overflow tiny int unsigned capacity of 255.
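The overflow warning is simple arithmetic. The sketch below assumes the database picks the smallest value of the form offset + N * increment above the current maximum. The offset of 7 and the starting maximum of 42 are made-up numbers for a hypothetical TINYINT UNSIGNED table.

```python
def next_auto_increment(current_max, offset, increment):
    """Smallest value offset + N * increment (N >= 0) greater than current_max."""
    if current_max < offset:
        return offset
    steps = (current_max - offset) // increment + 1
    return offset + steps * increment

TINYINT_UNSIGNED_MAX = 255

# Hypothetical monolithic table: highest existing id is 42, and the monolith
# was given the free offset 7 together with the shared increment of 100.
current, inserted = 42, 0
while True:
    candidate = next_auto_increment(current, offset=7, increment=100)
    if candidate > TINYINT_UNSIGNED_MAX:
        break
    current, inserted = candidate, inserted + 1
print(inserted, current)  # 2 207
```

Two rows fit (107 and 207) and the third would need 307, so columns like this have to be widened before the increment is changed.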

After that synchronize data on dispatch database - every client you have should point to this "fake" shard. Deploy your code changes to use dispatch logic.

You should be running code with complete sharding logic but on reduced environment - with only one "fake" shard made out of your monolithic database. You may also already enable creating new client accounts on your real shards.

Tons of small issues to fix will pop up at this point. Forgotten pieces of code, broken analytics tools, broken support panels, neutral or dispatch databases in need of tuning.

And when you squash all the bugs it is time for grande finale: clients migration.

You do not need any global downtime to perform the client data migration. Disabling your whole product for a few weeks would be unacceptable and would cause huge financial losses. But you need some mechanism to disable access for individual clients while they are being migrated. A single flag in the dispatch database should do, but your code should be aware of it and present a nice information screen to the client when he tries to log in. And of course nothing else may modify the client's data while he is being migrated.

If you have some history of your client habits - use it. For example if client is usually logging in at 10:00 and logging out at 11:00 schedule his migration to another hour. You may also figure out which timezones clients are in and schedule migration for the night for each of them. The migration process should be as transparent to client as possible. One day he should just log in and bam - fast and responsive product all of a sudden.

Exodus was the tool used internally at GetResponse.com to split the monolithic database into shards, and it is still used to load balance the sharding environment. It lets you extract a subset of rows from a relational database and build a series of queries that can insert those rows into another relational database with the same schema.

Fetch Exodus.pm and create exodus.pl file in the same directory with the following content:

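#!/usr/bin/env perl

use strict;
use warnings;

# Exodus.pm sits next to this script; newer Perls no longer search "." by default
use FindBin;
use lib $FindBin::Bin;

use DBI;
use Exodus;

# connect to the monolithic database
my $database = DBI->connect(
    'DBI:mysql:database=test;host=localhost',
    'root', undef,
    {'RaiseError' => 1}
);

# 'root' names the table that represents a client
my $exodus = Exodus->new(
    'database' => $database,
    'root' => 'clients',
);

# extract everything that belongs to the client with id = 1
$exodus->extract( 'id' => 1 );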

Of course provide proper credentials to connect to your monolithic database.

Now when you call the script it will extract all data for client represented by record of id = 1 in clients root table. You can directly pipe it to another database to copy the client there.

./exodus.pl | mysql --host=my-shard-1 --user=....

Update dispatch shard for this client, check that product works for him and remove his rows from monolithic database.

Repeat for every client.
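The order of the steps matters, so here is the per-client loop as a rough sketch with dictionaries standing in for the databases. Everything in it (`migrate_client`, the `migrating` flag, the row tuples) is hypothetical. In practice the copy is the Exodus pipe shown above and the checks are whatever your product needs.

```python
def migrate_client(client_id, dispatch, source, target, target_no):
    """Move one client's rows from source to target while only that client is locked."""
    entry = dispatch[client_id]
    entry["migrating"] = True                # login code shows an information screen
    try:
        rows = source[client_id]             # stands in for the Exodus extract
        target[client_id] = list(rows)       # stands in for piping into the target shard
        if target[client_id] != rows:        # verify the copy before switching over
            del target[client_id]
            raise RuntimeError("copy verification failed")
        entry["shard"] = target_no           # point dispatch at the new shard
        del source[client_id]                # only now remove the old rows
    finally:
        entry["migrating"] = False           # let the client back in

# Shard 0 plays the role of the "fake" shard made out of the monolithic database.
dispatch = {1: {"shard": 0, "migrating": False}}
monolith = {1: [("clients", 1, "alice"), ("foo", 10, 1)]}
shard_1 = {}

migrate_client(1, dispatch, monolith, shard_1, target_no=1)
print(dispatch[1]["shard"], 1 in monolith, 1 in shard_1)  # 1 False True
```

The old rows are deleted last, so a failure at any earlier point leaves the client where he was.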

Do not use a user with the SUPER grant to perform the migration. Not because it is unsafe, but because such users do not have locales loaded by default. You may end up with messed up character encodings if you do so.

MySQL is quite dumb when it comes to cascading DELETE operations. If you have such schema

           +----------+
           | clients  |
           +----------+
+----------| id       |----------------+
|          | login    |                |
|          | password |                |
|          +----------+                |
|                                      |
|  +-----------+        +-----------+  |
|  | foo       |        | bar       |  |
|  +-----------+        +-----------+  |
|  | id        |----+   | id        |  |
+-<| client_id |    |   | client_id |>-+
   +-----------+    +--<| foo_id    |
                        +-----------+

and all relations are ON DELETE CASCADE then sometimes it cannot resolve proper order and may try to delete data from foo table before data from bar table, causing constraint error. In such cases you must help it a bit and manually delete clients data from bar table before you will be able to delete row from clients table.
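If you have to work out the manual order for a bigger schema, a topological sort of the foreign key graph gives it to you. This is a generic sketch and not something Exodus does for you. The `references` dictionary simply restates the schema above by hand.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# child table -> set of parent tables it references
references = {
    "clients": set(),
    "foo": {"clients"},
    "bar": {"clients", "foo"},
}

# static_order() lists every table after all the tables it references,
# which is a safe INSERT order; reversing it gives a safe DELETE order.
insert_order = list(TopologicalSorter(references).static_order())
delete_order = insert_order[::-1]
print(delete_order)  # ['bar', 'foo', 'clients']
```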

Exodus allows you to manually add missing relations between tables, that couldn't be created in the regular way for some reasons (for example table engine does not support foreign keys).

my $exodus = Exodus->new(
    'database' => $dbh,    # your DBI handle
    'root' => 'clients',
    'relations' => [
        {
            'nullable' => 0,
            'parent_table' => 'foo',
            'parent_column' => 'id',
            'child_table' => 'bar',
            'child_column' => 'foo_id',
        },
        # { ... another one ... }
    ]
);

Note that if foreign key column can be NULL such relation should be marked as nullable.

Also remember to delete rows from such table when your client migration is complete. Due to lack of foreign key it won't auto cascade.

When all of your clients are migrated simply remove your "fake" shard from infrastructure.

I plan to refactor this code to Perl 6 (it was prototyped in Perl 6, although back in the days when GetResponse introduced sharding Perl 6 was not fast or stable enough to deal with terabytes of data). It should get proper abstractions, support for more engines and of course a good test suite.

YAPC NA 2016 "Pull request challenge" seems like a good day to begin :)

If you have any questions about database sharding or want to contribute to this guide or Exodus tool contact me in person or on IRC as "bbkr".

YOU'VE PROVEN TOO TOUGH FOR [monolithic database design] HELL TO CONTAIN

(DOOM quote)

I don't know anything, but I do know that everything is interesting if you go into it deeply enough. — Richard Feynman

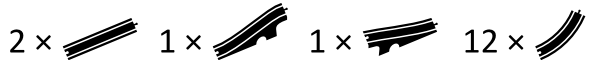

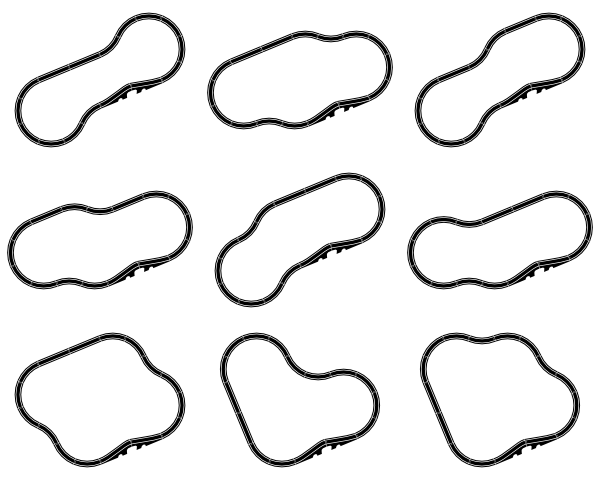

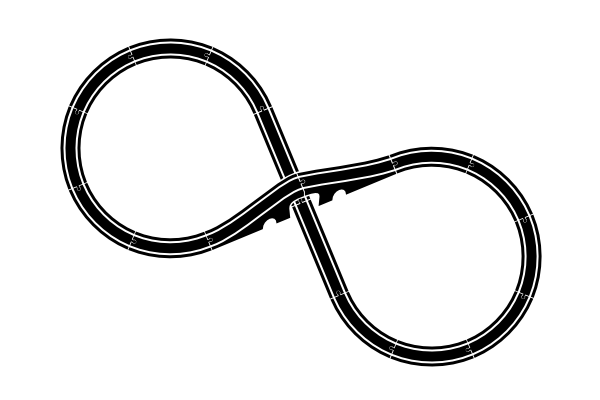

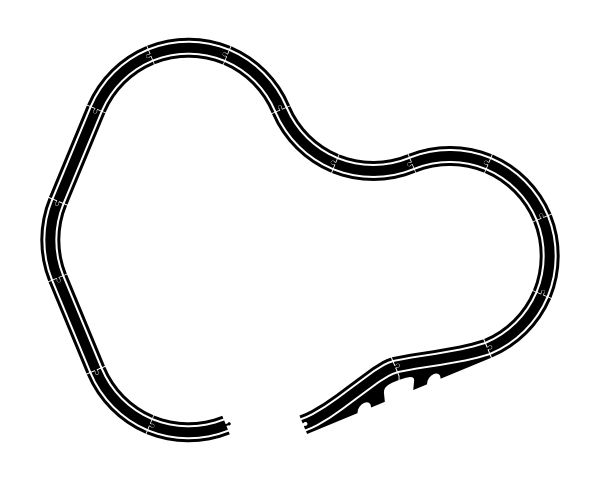

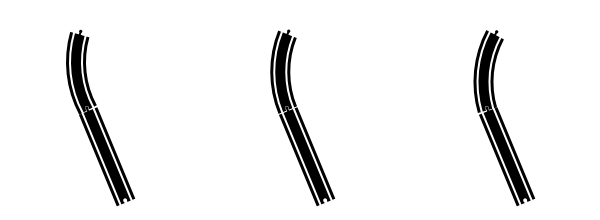

Someone gives you a box with these pieces of train track:

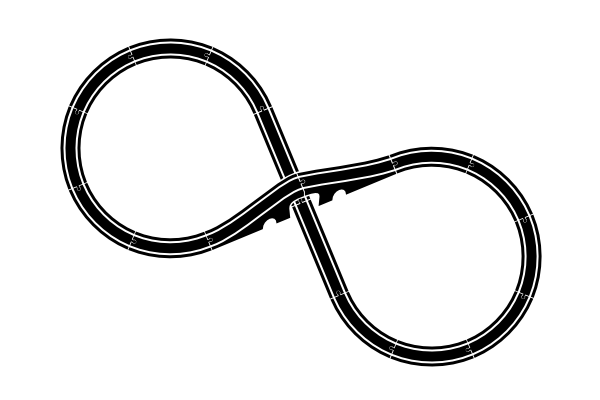

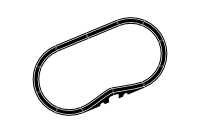

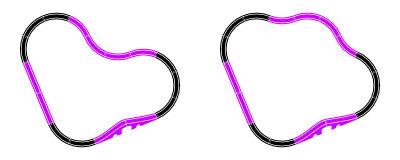

It's possible you quickly spot the one obvious way to put these pieces into one single train track:

But... if you're like me, you might stop and wonder:

Is that the only possible track? Or is there some equally possible track one could build?

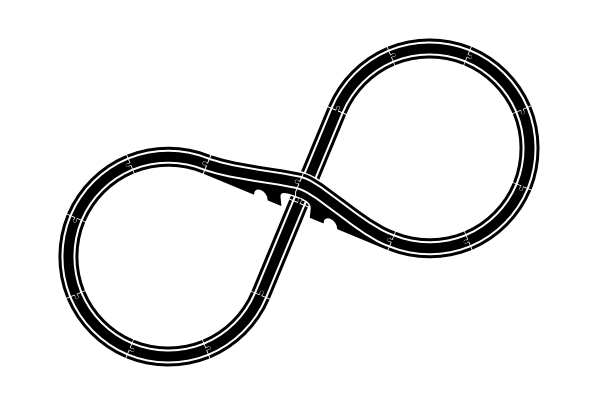

I don't just mean the mirror image of the track, which is obviously a possibility but which I consider equivalent:

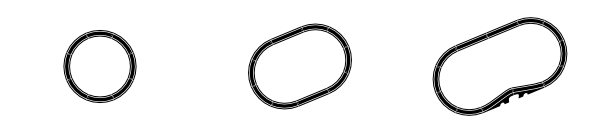

I will also not be interested in complete tracks that fail to use all of the pieces:

I would also reject all tracks that are physically impossible because of two pieces needing to occupy the same points in space. The only pieces which allow intersections even in 2D are the two bridge pieces, which allow something to pass under them.

I haven't done the search yet. I'm writing these words without first having written the search algorithm. My squishy, unreliable wetware is pretty certain that the obvious solution is the only one. I would love to be surprised by a solution I didn't think of!

Here goes.

❦

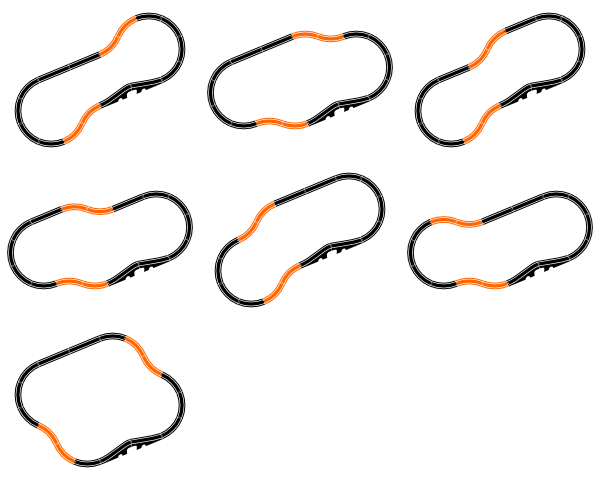

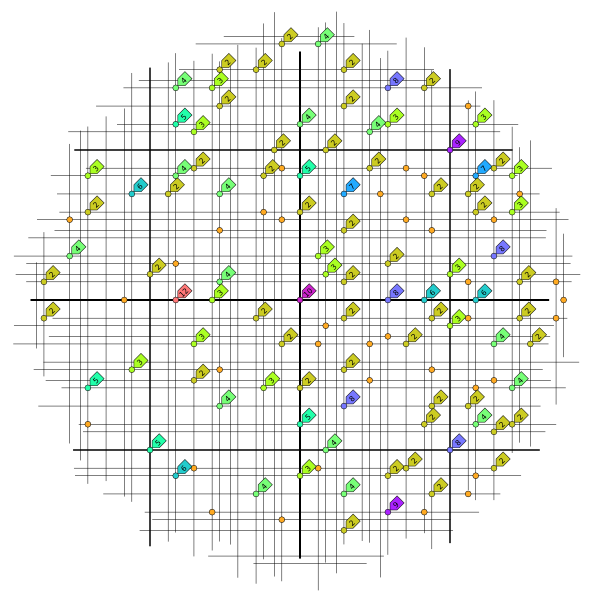

Yep, I was surprised. By no less than nine other solutions, in fact. Here they are.

I really should have foreseen most of those solutions. Here's why. Already above the fold I had identified what we could call the "standard loop":

This one is missing four curve pieces:

But we can place them in a zig-zag configuration...

...which work a little bit like straight pieces in that they don't alter the angle. And they only cause a little sideways displacement. Better yet, they can be inserted into the standard loop so they cancel each other out. This accounts for seven solutions:
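The zig-zag claim can be checked numerically. The sketch below is not the search or rendering code from this post. It assumes each curve piece is a 45 degree arc of unit radius (the post does not state either number here), and `follow` is a name made up for the illustration.

```python
import math

def follow(pieces, radius=1.0, turn=math.radians(45)):
    """Trace curve pieces from the origin heading along +x; return (x, y, heading)."""
    x = y = heading = 0.0
    for piece in pieces:
        sign = 1 if piece == "L" else -1   # "L" = left curve, "R" = right curve
        # The arc's centre lies one radius to the left (or right) of the heading.
        cx = x - sign * radius * math.sin(heading)
        cy = y + sign * radius * math.cos(heading)
        heading += sign * turn
        x = cx + sign * radius * math.sin(heading)
        y = cy - sign * radius * math.cos(heading)
    return x, y, heading

x, y, heading = follow("LR")
print(round(x, 3), round(y, 3), round(math.degrees(heading), 3))
# 1.414 0.586 0.0
```

Under these assumptions a left curve followed by a right curve leaves the heading unchanged and shifts the track sideways by about 0.59 of the radius. A mirrored zig-zag elsewhere in the loop shifts it back.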

If we combine two zig-zag pieces in the following way:

...we get a piece which can exactly cancel out two orthogonal sets of straight pieces:

This, in retrospect, is the key to the final two solutions, which can now be extended from the small round track:

If I had required that the track pass under the bridge, then we are indeed back to only the one solution:

(But I didn't require that, so I accept that there are 10 solutions, according to my original search parameters.)

❦

But then reality ensued, and took over my blog post. Ack!

For fun, I just randomly built a wooden train track, to see if it was on the list of ten solutions. It wasn't.

When I put this through my train track renderer, it comes up with a track that doesn't quite meet up with itself:

But it works with the wooden pieces. Clearly, there is some factor here that we're not really accounting for.

That factor turns out to be wiggle, the small amounts that two pieces can shift and rotate around the little connector that joins them: